Complete user utterance and compromises with Alexa

(The Japanese version of this article is here.)

cheerio-the-bear.hatenablog.com

In the previous article below, I wrote that introducing a custom slot with a meaningless sample utterance such as 'a' is probably the best available way to get the complete user utterance with Alexa. In this article, I explain why it is still not a perfect solution.

cheerio-the-bear.hatenablog.com

- Issue 1. Unexpected match with the meaningless sample utterance

- Issue 2. Uncontrollable standard built-in intents

- Issue 3. Display interface cannot be enabled

Issue 1. Unexpected match with the meaningless sample utterance

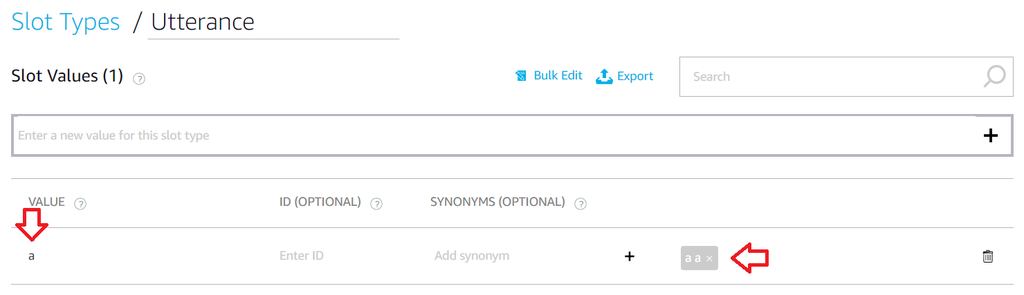

I did not expect that the slot using my custom slot type would sometimes receive 'a a', the meaningless synonym shown in the screenshot. I registered that synonym in my custom slot type only as a device for getting the complete user utterance. Of course, I never said such a meaningless phrase, but Alexa sometimes seems to recognize my utterance as 'a a'.

In this case there is no way to get what the user actually said, so the skill has to check the resolution status and implement some kind of workaround. In the following code snippet, 'utterance' is the slot to which I applied my custom slot type with the meaningless sample utterance and synonym. If the resolution status is 'ER_SUCCESS_MATCH', the value passed to the skill cannot be used as the user utterance unless the user actually said 'a a'.

```javascript
handle(handlerInput) {
  const utterance = handlerInput.requestEnvelope.request.intent.slots.utterance;
  if (utterance.resolutions &&
      utterance.resolutions.resolutionsPerAuthority &&
      utterance.resolutions.resolutionsPerAuthority[0] &&
      utterance.resolutions.resolutionsPerAuthority[0].status.code === 'ER_SUCCESS_MATCH') {
    // Unfortunately, the user utterance was unexpectedly matched
    // with the meaningless synonym, so utterance.value is not what the user said.
    // ...some kind of workaround goes here.
  }
  // ...
}
```
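The resolution check in the handler above can also be pulled out into a small, self-contained helper. This is a minimal sketch, assuming the slot object has the shape Alexa sends in an IntentRequest (`value` plus `resolutions.resolutionsPerAuthority[].status.code`). The function name `getCompleteUtterance` and the authority string in the usage example are hypothetical, and returning `null` is only one possible way to tell the caller that the workaround path is needed.

```javascript
// Hypothetical helper (not part of the ASK SDK): decide whether a slot value captured
// through the meaningless sample utterance can be trusted as the user's own words.
function getCompleteUtterance(slot) {
  if (!slot || typeof slot.value !== 'string' || slot.value === '') {
    // Nothing was captured at all.
    return null;
  }
  const authorities =
    (slot.resolutions && slot.resolutions.resolutionsPerAuthority) || [];
  // ER_SUCCESS_MATCH means entity resolution mapped the input onto a value of the
  // custom slot type, i.e. the meaningless 'a' or its synonym 'a a'.
  const matchedDummyValue = authorities.some(
    (authority) => authority.status && authority.status.code === 'ER_SUCCESS_MATCH'
  );
  // On a match the real words are lost, so the caller must fall back to a workaround.
  return matchedDummyValue ? null : slot.value;
}

// Usage with two hand-written slot objects (the authority string is a placeholder).
const unmatched = {
  name: 'utterance',
  value: 'good',
  resolutions: {
    resolutionsPerAuthority: [
      { authority: 'example-authority', status: { code: 'ER_SUCCESS_NO_MATCH' } },
    ],
  },
};
const matched = {
  name: 'utterance',
  value: 'a a',
  resolutions: {
    resolutionsPerAuthority: [
      { authority: 'example-authority', status: { code: 'ER_SUCCESS_MATCH' } },
    ],
  },
};
console.log(getCompleteUtterance(unmatched)); // good
console.log(getCompleteUtterance(matched)); // null
```

A `null` result does not recover the lost words; it only makes the "unexpected match" case explicit so the skill can, for example, ask the user to say it again.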

Issue 2. Uncontrollable standard built-in intents

This issue affects not only the custom-slot solution but every other possible approach to getting the complete user utterance, as long as some standard built-in intents are also used in the same skill. The problem is that standard built-in intents always take priority, regardless of my skill's context, and consume the user utterance.

In my case, I needed AMAZON.YesIntent and AMAZON.NoIntent only for a single question at the beginning of the conversation. I want to get the user utterance 'good' from my custom slot, but instead of my custom intent with the user utterance, my skill receives AMAZON.YesIntent. AMAZON.YesIntent does not carry the actual user utterance, so there is no way to know what was said: it might have been 'yes', but it might also have been 'good'.
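Because AMAZON.YesIntent arrives without any slot holding the spoken words, the most a skill can do is notice when the words were lost. The following is a minimal sketch under two assumptions: a session attribute (the hypothetical `awaitingYesNo`) records whether the opening yes/no question is still pending, and the custom intent with the `utterance` slot is named `CompleteUtteranceIntent` (also hypothetical). It works on plain request-envelope objects so it can run without the ASK SDK.

```javascript
// Sketch: classify an incoming request by what the skill can know about the user's
// actual words. Names other than the AMAZON.* intents are hypothetical.
const YES_NO_INTENTS = new Set(['AMAZON.YesIntent', 'AMAZON.NoIntent']);

function classifyRequest(requestEnvelope) {
  const request = requestEnvelope.request;
  const attributes =
    (requestEnvelope.session && requestEnvelope.session.attributes) || {};
  if (request.type !== 'IntentRequest') {
    return { kind: 'other' };
  }
  const intentName = request.intent.name;
  if (intentName === 'CompleteUtteranceIntent') {
    // The custom intent carries the captured words in its slot.
    const slot = request.intent.slots && request.intent.slots.utterance;
    return { kind: 'utterance', text: slot ? slot.value : undefined };
  }
  if (YES_NO_INTENTS.has(intentName)) {
    if (attributes.awaitingYesNo) {
      // The opening question is pending, so a yes/no answer is expected here.
      return { kind: 'answer', yes: intentName === 'AMAZON.YesIntent' };
    }
    // Outside that question the built-in intent swallowed the words: the user may
    // have said 'yes', but also 'good', and the request does not tell which.
    return { kind: 'lost', intent: intentName };
  }
  return { kind: 'other', intent: intentName };
}

// Usage: AMAZON.YesIntent arriving after the opening question has been answered.
const envelope = {
  session: { attributes: { awaitingYesNo: false } },
  request: { type: 'IntentRequest', intent: { name: 'AMAZON.YesIntent', slots: {} } },
};
console.log(classifyRequest(envelope).kind); // lost
```

A 'lost' result could trigger a reprompt asking the user to rephrase. That is a compromise rather than a fix, since the original words remain unavailable.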

Issue 3. Display interface cannot be enabled

I actually wanted to show some messages to the user when the user's device has a display. I implemented some code to support the display and turned on the Display interface, but I turned it off again as soon as I noticed that supporting the Display interface is a bad idea if I want to get the complete user utterance.
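For reference, deciding whether the device has a display only requires looking at the device capabilities in the request. This is a minimal sketch, assuming the request-envelope layout Alexa uses for the Display interface (`context.System.device.supportedInterfaces.Display`); `hasDisplay` is a hypothetical helper name, and the two sample envelopes are hand-written, not captured from real devices.

```javascript
// Hypothetical helper: true if the requesting device reports the Display interface.
function hasDisplay(requestEnvelope) {
  const device =
    requestEnvelope.context &&
    requestEnvelope.context.System &&
    requestEnvelope.context.System.device;
  return Boolean(
    device && device.supportedInterfaces && device.supportedInterfaces.Display
  );
}

// Hand-written sample envelopes, trimmed to the fields the helper reads.
const withScreen = {
  context: {
    System: {
      device: {
        supportedInterfaces: { Display: { templateVersion: '1.0', markupVersion: '1.0' } },
      },
    },
  },
};
const withoutScreen = {
  context: { System: { device: { supportedInterfaces: {} } } },
};
console.log(hasDisplay(withScreen)); // true
console.log(hasDisplay(withoutScreen)); // false
```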

The reason I turned off the Display interface and gave up on showing messages to the user is related to the previous issue. The following screenshot shows the many standard built-in intents that are added when the Display interface is turned on. It is easy to imagine that words and phrases such as "more", "next", and "page up" will not be passed to my skill as they are; the corresponding standard built-in intents will arrive instead. I would rather receive the user utterance 'more' as 'more', not as AMAZON.MoreIntent.
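If these built-in intents cannot be disabled, one compromise is to translate them back into a representative phrase. This is a lossy sketch: the table lists only a few display-related built-in intents as an assumption, and the phrase chosen for each is merely a guess at the user's wording, since other phrasings may arrive as the same intent. The function name `approximateUtterance` and the custom intent name `CompleteUtteranceIntent` are hypothetical.

```javascript
// Assumed mapping from a few display-related built-in intents to a representative
// phrase. The user's real wording is not available, so each entry is a best guess.
const BUILT_IN_PHRASES = {
  'AMAZON.MoreIntent': 'more',
  'AMAZON.NextIntent': 'next',
  'AMAZON.PreviousIntent': 'previous',
  'AMAZON.PageUpIntent': 'page up',
  'AMAZON.PageDownIntent': 'page down',
  'AMAZON.ScrollUpIntent': 'scroll up',
  'AMAZON.ScrollDownIntent': 'scroll down',
};

// Returns the captured text for the custom intent, a guessed phrase for a mapped
// built-in intent, or null when nothing usable can be recovered.
function approximateUtterance(intent, customIntentName) {
  if (intent.name === customIntentName) {
    const slot = intent.slots && intent.slots.utterance;
    return slot && slot.value ? slot.value : null;
  }
  return Object.prototype.hasOwnProperty.call(BUILT_IN_PHRASES, intent.name)
    ? BUILT_IN_PHRASES[intent.name]
    : null;
}

console.log(approximateUtterance({ name: 'AMAZON.MoreIntent' }, 'CompleteUtteranceIntent')); // more
console.log(approximateUtterance({ name: 'AMAZON.HelpIntent' }, 'CompleteUtteranceIntent')); // null
```

The guessed phrase lets the rest of the skill treat "more" uniformly, but it cannot distinguish between different wordings that Alexa folded into the same built-in intent.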

Is there any way to disable these standard built-in intents, or at least lower their priority?